A couple of days ago, Dimitri published a blog post, Analyzing Percona’s TPCC-like Workload on MySQL 5.5, which was a response to my post, MySQL 5.5.8 and Percona Server: being adaptive. I will refer to Dimitri’s article as article [1]. As always, Dimitri has provided a very detailed and thoughtful article, and I strongly recommend reading if you want to understand how InnoDB works. In his post, Dimitri questioned some of my conclusions, so I decided to take a more detailed look at my findings. Let me show you my results.

Article [1] recommends using the innodb_max_dirty_pages_pct and innodb_io_capacity parameters to get stable throughput in MySQL 5.5.8. Let’s see what we can do with them. Article [1] also advises that innodb_log_file_size is not important for stable throughput.

For my tests, I again used the Cisco UCS C250 box with 346GB of RAM , and I ran the tpcc-mysql benchmark with 500W (about 50GB of data) on the FusionIO 160GB SLC card. For innodb_buffer_pool_size I used 26GB to represent about a 1/2 ratio of buffer_pool_size to data.

For the initial tests, IÂ used MySQL 5.5.8 (the tar.gz binary from dev.mysql.com), and for the other tests I used Percona Server based on 5.5.8. Addressing a complaint to my previous post, I am sharing the percona-server-5.5.8.tar.gz I used for testing, but please note: It is very pre-beta and should not be used in production. You can download it from our TESTING area.

In order to test different settings in a short period of time, I used 30-minute runs, which may not be long enough to see the long-term trend, but we will see the effects anyway. The full command line to run the test is:

1 | tpcc_start localhost tpcc500w root "" 500 32 10 1800 |

. For better understanding the results for each run, I will show different graphs:

- benchmark throughput – This is New Order Transactions per 10 seconds.

- dirty page – This graph will contain the percentage of dirty pages in the InnoDB buffer pool. This value is calculated from the output of using this formula:Â1mysqladmin ext -i10. This is the exact formula that InnoDB uses internally to estimate current innodb_dirty_pages_pct.1(100*Innodb_buffer_pool_pages_dirty)/(1+Innodb_buffer_pool_pages_data+Innodb_buffer_pool_pages_free)

- checkpoint age – This is a value in MB or GB and shows what amount of the space in innodb_log_file corresponds to changed pages in the buffer pool. You can compute this value asfrom SHOW ENGINE INNODB STATUS.1Log sequence number - Last checkpoint at

Here are the InnoDB settings for the initial run. Later I will change them in searching for optimal values.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 | innodb_file_per_table = true innodb_data_file_path = ibdata1:10M:autoextend innodb_flush_log_at_trx_commit = 2 innodb_flush_method = O_DIRECT innodb_log_buffer_size = 64M innodb_buffer_pool_size = 26G innodb_buffer_pool_instances=16 innodb_log_file_size = 2000M innodb_log_files_in_group = 2 innodb_read_io_threads = 16 innodb_write_io_threads = 16 innodb_purge_threads=1 innodb_adaptive_flushing=1 innodb_doublewrite=1 |

Please note that initially I used the default value for innodb_max_dirty_pages_pct, which is 75, and the default value for innodb_io_capacity, which is 200. I also enabled innodb_doublewrite. As will appear later, it is quite a critical parameter.

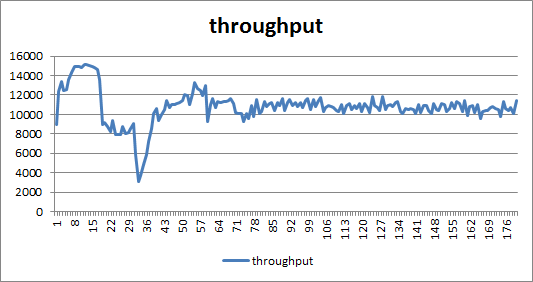

So, the results for the initial run, using MySQL 5.5.8:

Let me explain the second graph a little. I put checkpoint age and dirty pages percentage on the same graph to show the relationship between them. Checkpoint age is shown by the red line, using the left Y-axis. Dirty pages are shown by the blue line, using the right Y-axis.

As expected, throughput jumps up and down. Checkpoint age is stable and is about 2854.02 MB. Checkpoint age is the limiting factor here, as InnoDB tries to keep the checkpoint age within 3/4 of the limit of the total log size (total size is 2000MB*2).

The 15-minute average throughput is 59922.8 NOTPM.

Okay, now following the advice in article [1], we will try to limit the percentage of dirty pages and increase I/O capacity.

So, I will set innodb_max_dirty_pages_pct=50 and innodb_io_capacity=20000.

As we see, throughput is getting into better shape, but is far from being a straight line.

If we look at the checkpoint age/dirty pages graph, we see that the dirty pages percentage is not respected, and is getting up to 70%. And again we see the limiting factor is checkpoint age, which is getting up to 3000MB during the run.

The 15-minute average result for this test is 41257.6 NOTPM.

So, it seems we are not getting the stable result of article [1], and the difference is the doublewrite area. Doublewrite activity actually adds significant I/O activity. Basically, it doubles the amount of writes :), as you see from its name. So, let’s see what result we have when we disable doublewrite; that is, set innodb_doublewrite=0.

Now, although throughput is not a perfect line, we see a totally different picture for dirty pages and checkpoint age.

The dirty page maximum of 50% is still not respected by InnoDB, but the checkpoint age drops far below the 3000MB line. It is now on about the 1500MB line.

The 15-minute average result for this test is 63898.13 NOTPM. That is, by disabling the doublewrite area, we improved the result 1.55x times.

As it seems hard for InnoDB to keep 50% dirty pages, let’s try 60%.

Here is the run with innodb_max_dirty_pages_pct=60.

Okay, now we finally see throughput more or less flat. The dirty page percentage is kept at the 60% level, and checkpoint age is at the 2000MB level; that is, not bounded by innodb_log_file_size.

The 15-minute average result for this test is 64501.33 NOTPM.

But we still have DOUBLEWRITE=OFF.

Since now we are limited by innodb_max_dirty_pages_pct, what will be the result if we try to increase it to 70% ?

It seems 70%Â is too big, and now we again hit the limit set by innodb_log_file_size.

The 15-minute average result for this test is 57620.6 NOTPM.

Let me summarize so far. With innodb_doublewrite disabled, we have stable throughput only with innodb_max_dirty_pages_pct=60. Setting this value to 50 or 70 gives us dips in throughput, though for different reasons. In the first case, InnoDB is unable to maintain the 50% level; in the second we are limited by the capacity of REDO logs.

So, what do we get if we again enable innodb_doublewrite, but we now set innodb_max_dirty_pages_pct=60?

This is a bummer. Throughput again jumps up and down. The dirty pages percentage is not respected, and InnoDB is not able to maintain it. And checkpoint age is back to 3000MB and again limited by innodb_log_file_size.

The 15-minute average result is 37509.73 NOTPM.

Okay, so what if we try an even smaller innodb_max_dirty_pages_pct, setting it to 30? (I use a 1-hour run in this case.)

I can’t say if the resullt should be considered stable. There are still a lot of variations.

The 15-minute average result is 37039.73 NOTPM.

Let’s try an even larger decrease, setting innodb_max_dirty_pages_pct=15.

This seems to be the most stable line I can get with MySQL 5.5.8.

The 15-minute average result is 37235.06 NOTPM.

This allows me to draw a conclusion which partially concurs with the conclusion in article [1]. My conclusion is: With doublewrite enabled, you can get a more or less stable line in MySQL 5.5.8 by tuning innodb_max_dirty_pages_pct and innodb_io_capacity; but the limiting factor is still innodb_log_file_size.

To prove it, I took Percona Server based on 5.5.8 and ran it in MySQL mode (that is, using adaptive_flushing from InnoDB and with the adaptive_checkpoint algorithm disabled), but with giant log files. I used a log file of 8000MB*2, just to see what the maximum checkpoint age is.

Success! With a big log file, we are getting stable throughput. Checkpoint age jumps up to 3900MB line, but the dirty page percentage is not kept within the 60% line, going instead up to the 70% line limit. That is, to get this stable throughput, we need a total log file size of about 3900MB + 25% = 5300MB.

The 15-minute average result for this test is 48983 NOTPM.

But what about innodb_max_dirty_pages_pct; can we get better results if we increase it? It’s not respected anyway.

Let’s try the previous run, but with innodb_max_dirty_pages_pct=75.

The 75% dirty pages line is at a stable level now, but something happened with throughput. It doesn’t have holes, but there is still oscillating. Checkpoint age is quite significant, reaching 7000MB in the stable area, meaning you need

about 9000MB of log space.

The 15-minute average result for this test is 55073.06 NOTPM.

What can be the reason? Let’s try a guess: flushing neighborhood pages.

Let’s repeat the last run, but with innodb_flush_neighbor_pages=0.

Okay, we are back to a stable level. Checkpoint age is also back to 3000MB, and dirty pages are stable as well, but getting to 77%. I am not sure why it is more than 75%. It is a point for further research, but you are probably tired from all these graphs, as am I.

The 15-minute average result for this test is 52679.93 NOTPM. This is 1.4x better than we have with the stable line in MySQL 5.5.8.

But, finally, let me show the result IÂ got running Percona Server in optimized mode:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 | innodb_buffer_pool_size = 26G innodb_buffer_pool_instances=1 innodb_log_file_size = 8000M innodb_log_files_in_group = 2 innodb_read_io_threads = 16 innodb_write_io_threads = 16 innodb_io_capacity=500 innodb_max_dirty_pages_pct = 60 innodb_purge_threads=1 innodb_adaptive_flushing=0 innodb_doublewrite=1 innodb_flush_neighbor_pages=0 innodb_adaptive_checkpoint=keep_average |

The 15-minute average result is 73529.73 NOTPM.

The throughput is about 1.33x better than in “MySQL compatible mode”, though it requires 10500MB for checkpoint age; that is, 14000MB of log space. And, the Percona Server result is ~2x better than the best result I received with MySQL 5.5.8 (with innodb_doublewrite enabled).

In summary, my conclusion is: You can try to get stable throughput in MySQL 5.5.8 by playing with innodb_max_dirty_pages_pct and innodb_io_capacity and having innodb_doublewrite enabled. But you must have the support of big log files (>4GB) to help increase throughput.

Basically, by lowering innodb_max_dirty_pages_pct, you are killing your throughput. When you disable innodb_doublewrite, you can get stable throughput if you are lucky enough to find a magic innodb_max_dirty_pages_pct value. As you saw in the results above, 50 and 70 are not good enough, and only 60 gives stable throughput.

(Post edited by Fred Linhoss)

Vadim,

Great analyzes ! I would mention one more thing here – tuning is hard and expensive and if we can get the system to self balance it is best. I think parameters as innodb_max_dirty_pages_pct and innodb_io_capacity is something which should not be explicitly set but system should be able to balance itself to get a best stable performance.

Now while we’re playing with benchmarks we need to understand this is only a game and real production systems do not stable workload in most cases. The number of users, types of transactions they run, batch scripts which are started in background always change the system and the optimal values will depend on the workload a lot and so be likely very different.

Vadim,

I do the same tpc-c test, and the result is close to yours.

http://www.facebook.com/note.php?note_id=135971213130668

Could you offer a link to the raw test data? I wish to compare several groups of test data in one gragh. Thanks a lot.

Hi Vadim,

thanks for sharing! – it’s a part of analyze I’ve expected to see within your initial article ;-)) and I’m also happy you got a better performance with MySQL 5.5.8 than presented before :-))

regarding doublewrite: I’ll test this case to see the impact.. – “normally” there should be near no impact on SSD storage.. – but let’s see..

regarding redolog size: I’m still not sure there is any “limit” coming due 4G redo logs.. – well, for sure, having 1TB redo logs and say 512GB buffer pool you may run on fly for hours and still just keeping a minimal writes – only redo log sequential writes, and you’ll have the fastest possible performance! – however while having a highly performant storage there should be not too much difference if you’re writing 20MB/s or 200MB/s in background..

also interesting that flushing of neighbor pages was several times tested in the past and was still not accepted as performance was worse with it..

Well, I still have yet more questions than answers..

Will keep you updated :-))

Rgds,

-Dimitri

Hi Vadim,

thanks for sharing! – it’s a part of analyze I’ve expected to see within your initial article ;-)) and I’m also happy you got a better performance with MySQL 5.5.8 than presented before :-))

regarding doublewrite: I’ll test this case to see the impact.. – “normally” there should be near no impact on SSD storage.. – but let’s see..

regarding redolog size: I’m still not sure there is any “limit” coming due 4G redo logs.. – well, for sure, having 1TB redo logs and say 512GB buffer pool you may run on fly for hours and still just keeping a minimal writes – only redo log sequential writes, and you’ll have the fastest possible performance! – however while having a highly performant storage there should be not too much difference if you’re writing 20MB/s or 200MB/s in background..

also interesting that flushing of neighbor pages was several times tested in the past and was still not accepted as performance was worse with it..

Well, I still have yet more questions than answers..

Will keep you updated.. :-))

Rgds,

-Dimitri

Dimitri – I disagree that the doublewrite buffer won’t limit performance. I can produce results showing that it does and from reading the code I believe my results. When the doublewrite buffer is enabled the write pattern is:

1) find pages to write

2) do large sequential write to doublewrite buffer for those pages

3) fsync that large sequential write

4) use background IO threads to do random writes in place

5) fsync the random writes

Note that step #5 is done even when O_DIRECT is used. See http://bugs.mysql.com/bug.php?id=45892. This is fixed in the Facebook patch. I also have results at http://mysqlha.blogspot.com/2009/06/buffered-versus-direct-io-for-innodb.html to show that the bogus fsync hurts performance.

But the big issue is that this won’t use all available IO capacity as there is a pattern here of one large write -> many small writes -> one large write …

And that one large write is likely to use a small number of disks given a reasonable RAID stripe. The other disks won’t be doing any writes at that time.

Mark,

the solution (workaround) I’ve found in the past for doublewrite buffer was to move it into a separate storage/disk (http://dimitrik.free.fr/blog/archives/2009/09/mysql-performance-io-optimization-innodb.html) – in this case you don’t mix its fast seq.writes with other I/O operations (specially random reads)..

However seems the things are more complicated now.. – hope to post some results soon.

Rgds,

-Dimitri

I agree with Peter. I think the goal should be a system that balances itself against the resources available to it, not over-committing to do some work that the hardware is not capable of doing. Database administrators shouldn’t have to set a complex mixture of two or three tuning parameters to exactly the right magic values for good performance. It’s impossible to do, because the workload is going to vary. The database needs to adjust as the workload varies. Configuration parameters that are set at a certain value can’t adjust with a changing workload. If there were a real-world application that ran something like the workload in these tests, but with real users driving it and things like backups and cron jobs running every now and then, we’d see stable performance at some times of the day, and wild oscillations at other times. That’s just not good enough.

I’m not saying it’s easy to achieve, though 🙂

Baron, Peter, Vadim,

probably you’ve understood me wrong – I’m not against the self-tuning, I’m pretty sure that it’ll be a goal within all future InnoDB improvements ;-)) (then don’t know if it’s really a part of a dream of every DBA.. – but it’s another discussion ;-))

my point was quite simple: if you’re doing some testing and comparing different solutions, please do it honest and try to reach the best possible performance on each one! :-)) (and it’s what I’m doing when testing XtraDB, and this post in the past was a good example: http://dimitrik.free.fr/blog/archives/2009/12/mysql-performance-breaking-limits-with-xtradb.html :-))

Rgds,

-Dimitri

Dimitri,

My writes are fast courtesy of HW RAID. They just aren’t fast enough and add latency. On a system with too much pressure on the buffer pool threads that run user transactions pause to schedule writes for dirty pages at the end of the free list and those writes suffer from the latency added by the doublewrite buffer write. This would be less of a problem if the main background thread did that work.

Dmitri,

Yes I agree with you. We should have found the combination offering best performance for MySQL 5.5.8 for given workload. At the same time if it requires “fine tuning” to get these numbers it is a downside as it is not always possible to achieve such tuning.