There are many ways to slice and aggregate metrics of activity on a system such as MySQL. In the best case, we want to know everything about the system’s activity: we want to know how many things happened, how big they were, and how long they took. We want to know precisely when they happened. We want to know resource consumption, instantaneous status snapshots, and wait analysis and graphs. These things can be expensive to measure, and even more importantly, they can require a lot of work to analyze. And sometimes the analysis is only valid when very stringent conditions are satisfied. What metrics can we gather that provide the most value for the effort?

There’s a relationship known as Little’s Law, sometimes also referred to as the fundamentals of queueing theory, that lays out some simple properties between various metrics. One of the properties of Little’s Law is that it holds regardless of inter-arrival-time distributions and other complex things. And you can build upon it to create surprisingly powerful models of the system.

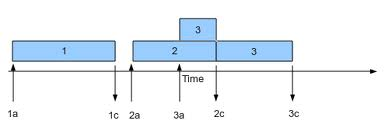

Let’s begin with a query. It arrives, executes for a bit, and then completes.

Then some time elapses, and another query arrives, executes, and completes.

To add the minimal amount of extra complexity, let’s say that another query arrived while query 2 was executing, and completed after query 2 completed. Now we have the following picture, fully annotated. The arrivals and completions are denoted by “a” and “c”.

If we start at the left end of the Time arrow, and call it the beginning of the observation period, and observe the system until the right end of the arrow, we have our observation interval. If we travel from left to right along the arrow, we can count the system’s “busy time” as the time during which at least one query is running. This is the period from 1a to 1c, plus the period from 2a to 3c.

It’s easy to compute this by just creating two counters and a last-event timestamp. Every time there is an arrival or completion, look at the counter of currently executing queries. If it is greater than 0, add the time elapsed since the last arrival or completion. Then increment the counter of currently executing queries if the current event is an arrival, and decrement it if it’s a completion. Finally, set the last-event timestamp to the current time.

Now, if we add another counter, we can get the “weighted busy time.” This counter is similar to the busy time, but instead of just adding the time elapsed since the last event, we multiply the time elapsed by the number of currently executing queries and add it. This is effectively the “area under the curve” — the blue-colored regions in the picture above.

Finally, we can simply count the number of arrivals or completions. It doesn’t matter which; Little’s Law is based on a long-term average, where the two are equal.

All together, we have the following four fundamental metrics:

- The observation interval.

- The number of queries in the interval.

- The total time during which queries resided in the system — the “busy time.”

- The total execution time of all queries — the “weighted time.”

We can maintain these counters in the system, and sample them at will by simply looking at the clock and writing down the three counters at the start and end of whatever observation period we choose.

What is this good for? We can use these together with Little’s Law to derive these additional four long-term average metrics:

- Throughput: divide the number of queries in the observation interval by the length of the interval.

- Execution time: divide the weighted time by the number of queries in the interval.

- Concurrency: divide the weighted time by the length of the interval.

- Utilization: divide the busy time by the length of the interval.

This is extremely powerful. These are the key metrics we need to model scalability with the Universal Scalability Law, perform queueing analysis, and do capacity planning. And we obtained all of this without needing to analyze anything complicated at all. This is just addition, subtraction, multiplication, and division of some constantly increasing counters!

There’s more. We derived our four long-term average metrics from the four fundamental metrics, and we derived those in turn from the arrivals and completions. In a future article, I’ll show you what else we can learn by observing arrivals and completions. And I’ll present on this at Percona Live, where I’ll show you how to tie it all together quickly to answer real-world questions about practically any system, whether it’s instrumented with the counters I mentioned or not, with shockingly little effort.

Any thoughts on providing a database table and accompanying procedures/triggers to begin logging the four fundamental metrics so that readers may implement this and derive the additional four long-term average metrics?

These metrics aren’t available in MySQL at this time. Later I’ll show how to measure them externally.

One thing I always liked about the Oracle metrics is that, in addition to telling me the concurrency level for a particular timeslice, they also gave the *what the queries were doing*.

So what I’d like to be able to pull from mysql is (logically at least):

For the timeslice 12:00 -> 12:00:01

Queries in CPU wait = 3

Queries in IO wait = 2

Queries waiting on Query Cache put = 1

Queries waiting on Mutex = 1

Total concurrency = 7

Baron and Tom,

This is a very subtle topic and it’s very easy to get confused (as I possibly am). And going back and forth in a blog probably won’t help matters, either—a whiteboard could easily get obliterated very quickly.

That said, I believe Baron is trying to construct something out mysql performance counters because not much is already available (e.g., not as much as in Linux). If that’s the motivation, that’s fine, but one has to be extremely careful when working with such low level data—especially distinguishing what the RDBMS knows from what the O/S knows.

Now that I look more closely, I’m not sure I agree with the diagrammatic description. One of my low level metrics is “number” or count. As Tom says, these are sampled events. The sample rate is set as a RDBMS kernel parameter (I don’t know the official terminology for mysql). Let’s take query throughput (X) as a concrete example. Each query event is accumulated in the concomitant counter, i.e., that counter gets incremented as each query event appears in the system. Nothing is known about *when* that counter got incremented. Moreover, you only find out what the count (C) is when you actually look at it—sample it.

The time-stamp corresponding to when you look at the query counter is determined, not by an RDBMS parameter but, by the measurement period (T) that you (the user, sysadm) choose in whatever performance tool you are using. *Period* here means *interval*: the sampling interval. So, the counter might be getting updated every second by the RDMS, whereas you might be sampling it only every 10 minutes (600 seconds). Once set, the sample period T can recur like the clicks of a metronome. That’s the basis of all good measurement.

The query throughput is the accumulated query count during the measurement period T (i.e., X = C/T the number of completions per unit time). The measurement period is an interval of length (T2 – T1), and so is the count because it is the difference b/w the count “now” (C2) and the count the last time you looked (C1). So more technically, the query throughput is given by X = (C2 – C1) / (T2 – T1) and is therefore a *time-averaged* quantity formed by the ratio of those intervals.

Thus, in Baron’s diagram, if you happened to sample at measurement endpoint T2 = “2c” you would see 3 events in the counter, not 2; even though event “3″ has only just started. Since you don’t know when the RDMBS is updating the counter, you can’t know that information. If you sampled at T2 = “3c” you would still get C2 = 3 because the counter is accumulator.

The kind of formal statistics that Tom writes about is typically associated with post-processing of performance data that goes on at a much higher level than what is being discussed here and usually much later on, although not always (cf. load average == EMA http://www.perfdynamics.com/papers.html#tth_sEc23). That’s why I prefer the term “performance metrics” over the oft-used “performance statistics” to remind myself that we first must have reliable and meaningful base metrics or measurements before we can do anything with them, such as compute other derived metrics or statistics.

Tom,

The “area” I’m referring to is that while a query is running, it contributes 1 to the number of requests resident in the server, so its height is 1. I think the difficulty with finding a good descriptive phrase for what I call the weighted time is that I’m defining it carefully to include requests that are still resident in the server, whereas I’ve seen others speak only about requests that have completed, e.g. they don’t recognize a request until they have “the whole thing.”

I called the time “counters” counters, because they are often measured in whole numbers of milliseconds or microseconds in real implementations. But in theory these should be real numbers, not integers, and the dimension should be seconds. You’re right, they are not counters, they are durations.

Your point about losing information when you aggregate into counts is right on. In the next episode I’ll be discussing arrival and completion events, which have a timestamp but no duration, from which all of this is derived.

I’ve saved your PDF reference in the same to-read folder as a bunch of other stuff related to this

Baron (and Neil),

Concering the expression “weighted time”: If I understand I correctly, you are describing the blue regions in the 3rd figure. Isn’t this just the cumulative time (i.e., the sum of all task durations)? I don’t know if “area under the curve” is a good statement, since intervals have “length” but no “height”. Perhaps you mean something else…

Also, you use the term “counter” yet reference a duration. If your counter is accumulating durations, I would shy away from calling it a counter. However, that’s really your choice.

This is probably unrelated, but I’ll throw it in: I consider a “count” to be an unofficial descriptive statistic. A count summarizes the number of events over an interval. Much information about the details of the timing of the events is lost when counting (e.g., did all of the events occur at the same time? or were they spread out over the interval?). For more, reference the end of section 2.5 of http://www.cmg.org/measureit/issues/mit75/m_75_3.pdf. Since I’m referencing myself, don’t assume anyone agrees with me!

Concerning 2 vs. 3 types of metrics: I haven’t thought about it enough, but I’m wondering if distinguishing between “intervals” and “rates” IS important. You compute statistics (e.g., mean, stand. dev.) differently for the two (same ref. as above).

Right. I simply haven’t done enough studying to know what’s right and what’s wrong in these matters sometimes.

By the way, I think your 3 fundamental metrics are 3 fundamental *types* of metrics, not the metrics themselves. I wrote something a little bit similar once upon a time, albeit much more tongue-in-cheek. My point was that counters aren’t enough instrumentation — I wanted to know size and duration of operations too. (In case you don’t know, MySQL is historically full of counters, and not much else.) That post is at http://www.xaprb.com/blog/2010/03/30/4-ways-that-instrumentation-is-like-sex/

Take a look at my slides on this topic, which I just posted to my blog http://perfdynamics.blogspot.com/2011/05/fundamental-performance-metrics.html They show how to do dimensional analysis and also how it relates to Little’s law (both of them).

I would strongly caution against using metrics defined by Linux or Unix kernel hackers (or any other hackers, for that matter). I’ve seen too many examples of (a) bastardized nomenclature, not to mention (b) broken code that incorrectly computes said metrics.

My best example of (a) is a technical description of how the fair-share scheduler operates: the underpinnings of all modern hypervisors. The privately defined performance metrics were absolutely incomprehensible; not the least because even conventional metrics, like utilization, were privately redefined.

Fave (b) example is Solaris load average, which got busted by some genius “improving” the code. Since the code change was never mentioned in the release notes (why bother users with such details?), anyone relying on that metric(s) for capacity planning was instantly screwed.

There is no virtue in reinventing the wheel only end up with an irregular polygon.

Dr. Gunther,

Thanks for your comments. I actually don’t call Little’s Law queueing theory myself, but when I’ve discussed the equation with people they often end up calling it queueing theory. I half-consciously considered explaining that, but couldn’t think of a way to do it without sounding condescending.

Busy-time and weighted-time are actually terms I pulled from Linux’s /proc/diskstats documentation and got in the habit of using. I later learned about Little’s Law and the term “residence time,” but I have never found a better term for what I call weighted time. Everything else I’ve heard is really wordy, like “total execution time of all requests”, but that is ambiguous because it usually refers to the time from a request’s arrival to completion, whereas the observation time period might bisect one or more requests.

Ultimately I derive everything from arrival and completion times (points in time, not durations), so I’m down to two metrics too Are yours the same as mine?

Are yours the same as mine?

Hi Baron,

Coincidently, I just happened to present something on this same topic at CMG Atlanta

http://perfdynamics.blogspot.com/2011/03/cmg-atlanta-southbound-to-deep-south.html

I claim there are only 3 fundamental performance metrics:

1. A number or a count (e.g., RSS, queries)

2. Time (which comes in several flavors; some of which you mention)

3. Rate or number of things issued per unit time (e.g., baud, TPS)

Everything else is derived. Actually, I can boil it down to 2 metrics but that

requires that you understand how to do dimensional analysis.

I’ll be discussing this stuff in more detail in my Guerrilla Boot Camp class, later this week

http://www.perfdynamics.com/Classes/schedule.html

Some other nits:

o Little’s Law, “sometimes also referred to as the fundamentals of queueing theory”

actually has nothing to do with queueing theory. John D. Little was a business school professor.

o “total time during which queries resided in the system — the busy time.”

That’s actually the *residence time*, not the busy time.

o I don’t understand what you mean by “weighted time.” I never heard of such a thing.

–njg

You never fail to impress Baron. This is going to be a great talk.

This is a great article. Can’t wait for the second part to be published. Also why MySQL did not implement such simple counters internally yet?

One thing I always liked about the Oracle metrics is that, in addition to telling me the concurrency level for a particular timeslice, they also gave the *what the queries were doing*.

So what I’d like to be able to pull from mysql is (logically at least):

For the timeslice 12:00 -> 12:00:01

Queries in CPU wait = 3

Queries in IO wait = 2

Queries waiting on Query Cache put = 1

Queries waiting on Mutex = 1

Total concurrency = 7

These metrics aren’t available in MySQL at this time. Later I’ll show how to measure them externally.

Any thoughts on providing a database table and accompanying procedures/triggers to begin logging the four fundamental metrics so that readers may implement this and derive the additional four long-term average metrics?